The illusion of evidence-based nudges

From a recent paper in the Journal of Political Economy by Stefano DellaVigna, Woojin Kim and Elizabeth Linos (2024):

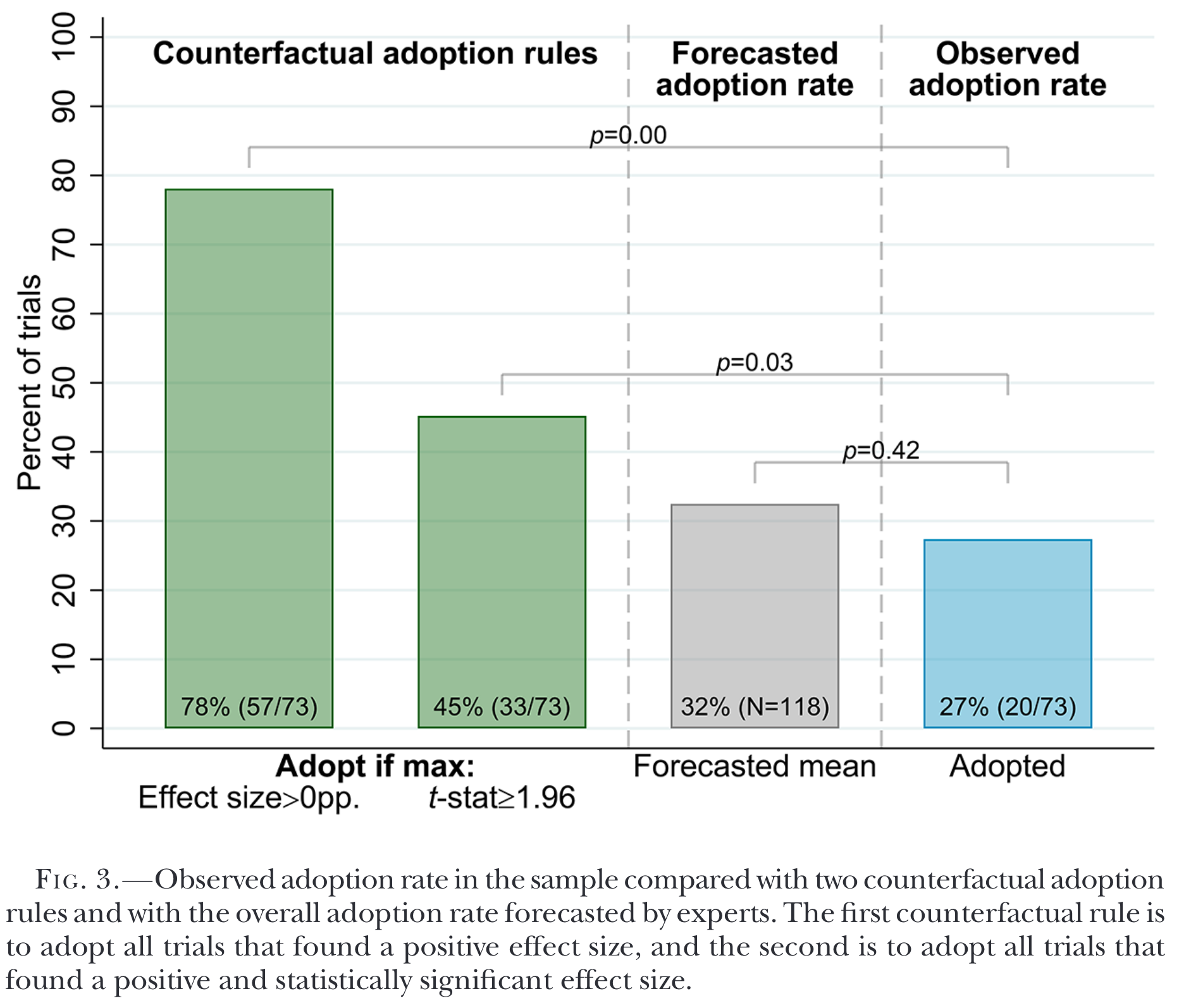

> We study 30 US cities that ran 73 RCTs with a national nudge unit. Cities adopt a nudge treatment into their communications in 27% of the cases. We find that the strength of the evidence and key city features do not strongly predict adoption; instead, the largest predictor is whether the RCT was implemented using preexisting communication, as opposed to new communication.

A nudge with a negative result is almost as likely to be implemented as one with a positive result:

> There is no difference in adoption for results with negative point estimates (25% adoption), results with positive but not statistically significant estimates (25%), and estimates that are positive and statistically significant (30%). The likelihood of adoption increases with effect size (measured in percentage points), from 17% in the bottom third to 38% in the top third, though this difference is not statistically significant at conventional levels.

My cynical take is that running trials with nudge units is cool. Despite more than a decade of nudge unit stories, behavioural insights is still a “shiny new thing” and a way to say “we’re doing science”. The hard work of implementing or scaling an intervention simply isn’t as sexy.